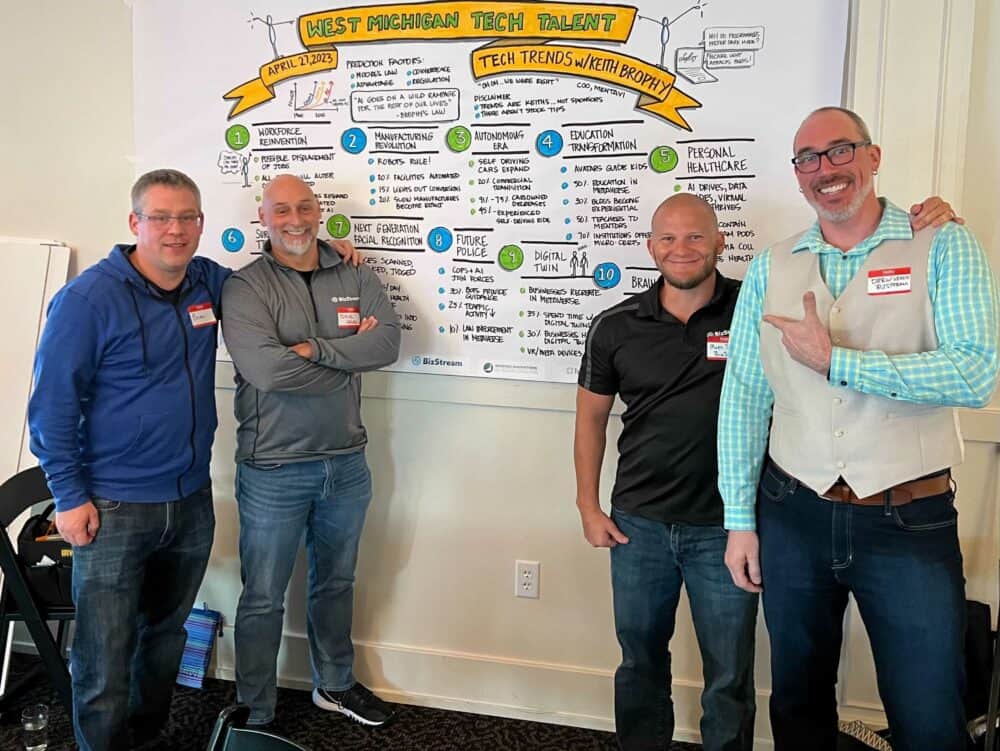

Last week, I attended an event by West Michigan Tech Talent, “Tech Trends with Keith Brophy.” Keith is a futurist, a Michigan Entrepreneur of the Year winner, and a longtime speaker on digital transformation, including AI, machine learning, the Internet of Things, and cybersecurity.

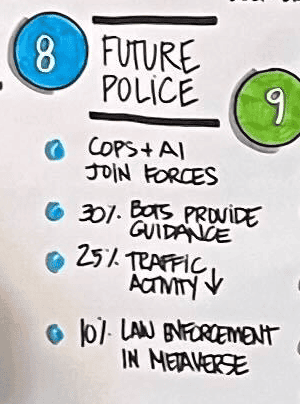

As you might expect, Keith’s tech trends this year focused on artificial intelligence (AI) and how it will change the world. That wasn’t a surprise, given how AI, ChatGPT, Bard, and related tools have been filling headlines and social media feeds. What did surprise me was his prediction that AI would change law enforcement.

Keith’s take was that police work and AI would come together, with AI guiding officers in the field to help de-escalate situations. Body cameras would use AI to read facial expressions and body language, and an audio feed in the officer’s ear would provide prompts to guide them toward a better outcome. It was a use of AI I hadn’t heard of before: combining technology already in use with AI to assist in situations where an officer may lack experience or a mental health professional can’t be on site. That idea got me thinking about how we could use AI in our YouthCenter Juvenile Justice Case Management software.

AI has been tried in the court system, and it has had its share of problems. Bias has been a long-standing issue with AI’s use in the justice system. In 2016, ProPublica highlighted this issue in an article titled “Machine Bias,” which examined risk assessment software used to predict future criminal behavior and found that it was biased against Black defendants. ProPublica isn’t alone: The Atlantic and the UCLA Law Review both published articles in 2019 highlighting the risks of relying on AI algorithms, including perpetuating systemic biases, a lack of transparency, and the potential for erroneous decisions.

We have explored using AI to predict recidivism, or a juvenile’s likelihood of success, based on data stored in our system, but we have not put it to use because of the pitfalls outlined in the articles above. If prediction isn’t a fair use of the technology, how else could we use AI to improve the experience of our users and benefit the youth they work with?

The truth is that some YouthCenter users are already using AI without realizing it, through voice-to-text. Instead of typing their case notes, they dictate them. It’s such a popular feature that caseworkers who use voice-to-text for their case notes have hugged me at conferences. These case managers and probation officers (POs) are ecstatic because they’re no longer bogged down by the administrative side of case management, which frees up more time to spend with youth and coach them toward better outcomes.

Voice-to-text is a great way to use AI to benefit users without introducing bias, and we’re not alone in using it. Medical startups such as Ambience and DeepScribe are transforming the way doctors, nurses, and other practitioners interact with patients. Instead of practitioners typing data into an electronic medical record (EMR), the technology listens to the conversation, transcribes it, and pulls the important data into the EMR.

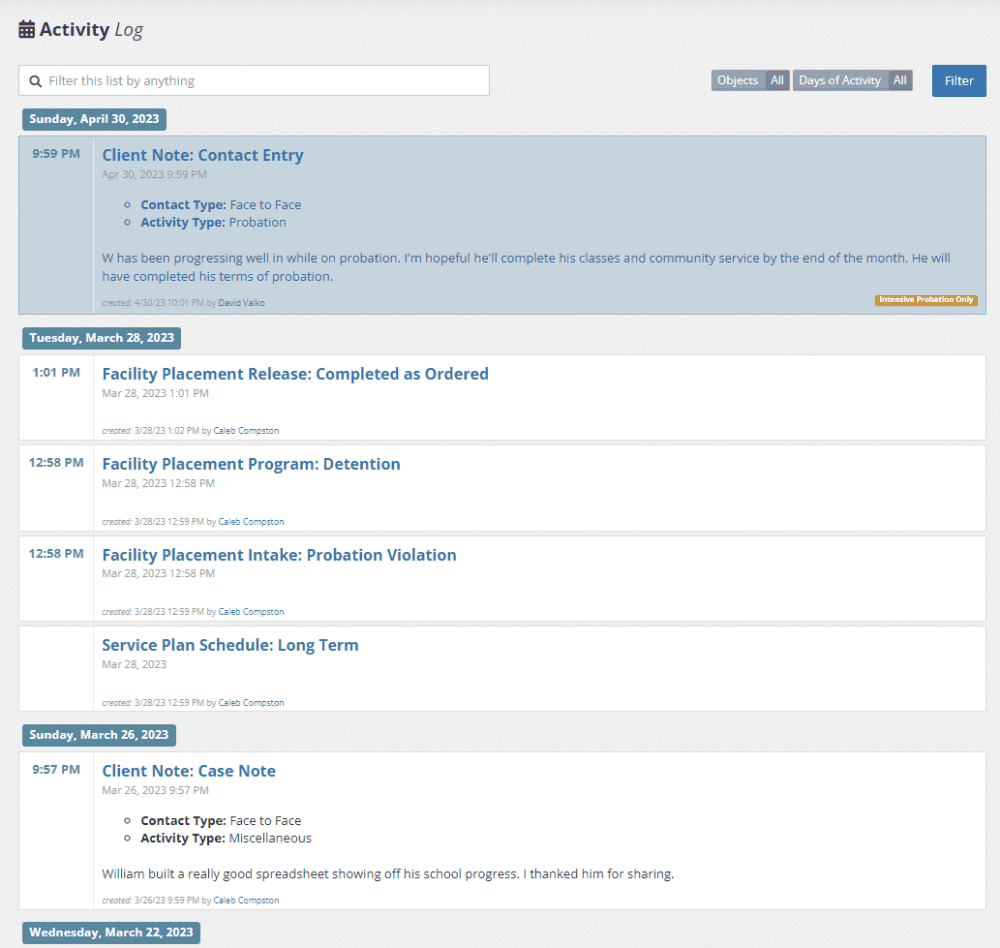

One of YouthCenter’s unique features is the Client Activity Log. The Activity Log is like a Facebook or Twitter timeline of every interaction or event involving the youth. Entries are listed in chronological order, grouped by date, and ordered by time, and the log can be filtered by event type or searched. While the Activity Log provides a detailed record of everything, it can be hard to get a big-picture view of what’s happening with the youth at a glance.
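To make the timeline behavior concrete, here is a minimal Python sketch of filtering log entries, then grouping them by date and ordering them by time. The `ActivityEntry` type, its fields, and the `activity_timeline` function are hypothetical illustrations, not YouthCenter’s actual data model or code.

```python
from dataclasses import dataclass
from datetime import date, datetime
from itertools import groupby
from typing import Iterable, Optional


@dataclass(frozen=True)
class ActivityEntry:
    # Hypothetical shape of one Activity Log entry; field names are illustrative only.
    timestamp: datetime
    event_type: str  # e.g. "Case Note", "Drug Test", "Court Hearing"
    text: str


def activity_timeline(
    entries: Iterable[ActivityEntry],
    event_type: Optional[str] = None,
    search: Optional[str] = None,
) -> list[tuple[date, list[ActivityEntry]]]:
    """Filter entries, then group them by calendar date, ordered by time within each day."""
    selected = [
        e
        for e in entries
        if (event_type is None or e.event_type == event_type)
        and (search is None or search.lower() in e.text.lower())  # case-insensitive search
    ]
    # Sort oldest-first so groupby sees each date as one contiguous run.
    selected.sort(key=lambda e: e.timestamp)
    return [
        (day, list(day_entries))
        for day, day_entries in groupby(selected, key=lambda e: e.timestamp.date())
    ]
```

Sorting before grouping matters because `itertools.groupby` only merges adjacent items. A social-media-style feed that shows the newest entries first would sort with `reverse=True` instead.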

Large language models, such as GPT-3.5 (the model behind ChatGPT), are excellent at summarizing complex information. AI-powered systems can quickly process large volumes of data, which in our case could include case notes, drug tests, program progress, and assessment scores. From that information, AI can generate concise, easy-to-understand summaries tailored to the needs of each user: caseworkers, administrators, or POs. This saves time and resources for those working in the justice system and helps ensure that critical details reach everyone who needs them. Used this way, AI supports more efficient and better-informed decision-making, which can ultimately improve outcomes for young people in the juvenile justice system.
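As a rough illustration of how case data could be prepared for an LLM, here is a Python sketch that turns mixed case records into a list of chat messages in the role/content format that chat completion APIs accept. The `CaseRecord` type, the `build_summary_messages` function, the prompt wording, and the use of a client reference instead of a name are all assumptions for illustration. The sketch does not send anything to a model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CaseRecord:
    # Hypothetical record type covering the kinds of data mentioned above.
    recorded_on: date
    category: str  # e.g. "Case Note", "Drug Test", "Program Progress", "Assessment"
    detail: str


# Assumed instructions; they ask for summaries only, not predictions about the youth.
SYSTEM_PROMPT = (
    "You summarize juvenile case activity for caseworkers, probation officers, "
    "and administrators. Be factual and concise, use only the records provided, "
    "and do not make predictions about the youth."
)


def build_summary_messages(
    client_ref: str, records: list[CaseRecord], max_records: int = 50
) -> list[dict[str, str]]:
    """Turn the most recent case records into chat messages for a summarization request."""
    if max_records < 1:
        raise ValueError("max_records must be at least 1")
    # Keep the newest records, listed oldest-first so the model reads them in order.
    recent = sorted(records, key=lambda r: r.recorded_on)[-max_records:]
    lines = [f"{r.recorded_on.isoformat()} | {r.category} | {r.detail}" for r in recent]
    user_prompt = (
        f"Summarize the recent activity for client {client_ref} in 3-5 sentences.\n\n"
        "Records (date | category | detail):\n" + "\n".join(lines)
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]
```

The resulting list could then be passed as the `messages` argument of a chat completions request, for example to an Azure OpenAI deployment. The deployment, credentials, and network setup are outside the scope of this sketch.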

I listen to Spotify’s “Daily Drive” on my way to the office almost every day. It’s a short, customized mix of the day’s news and the music I listen to, and it’s a great way to start the day. What if I could get a summary of my workday the same way? Imagine you’re a supervisor who gets a short summary of all the clients in your jurisdiction and listens to it on the drive in. You’re up to speed before you even walk in the door. Combining AI summarization with text-to-speech could make this possible.

To take it further, we could use technology like Spotify’s AI DJ, along with voice-mimicking technology such as Microsoft’s VALL-E, to build a custom, secure YouthCenter audio stream. Each summary could then be read in the voice and tone of the primary caseworker working with that youth. As a supervisor, you could hear updates on your team’s cases in the voice of the case manager handling each one.

All these new uses for AI in juvenile justice are promising, but we also need to think about data security and privacy. Nobody should be copying and pasting juvenile data into ChatGPT to get summaries. Luckily, we have our very own Microsoft Azure MVP on staff, who keeps us up to speed on this technology and on issues like privacy.

Our YouthCenter Juvenile Case Management Software is hosted in the Microsoft Azure Government Cloud, which adds layers of security for our clients and for us. The Azure OpenAI Service is also available in the Government Cloud, where enterprise-grade security, role-based access control (RBAC), and private networks keep juvenile data separate from other data sources. Keeping juvenile data secure and away from anyone who shouldn’t have access to it is a high priority. We take that responsibility very seriously and will examine how any AI technology accesses and shares data before we implement it.

We’re happy to partner with Microsoft on AI technology. Microsoft has developed a Responsible AI Standard that addresses many of the concerns raised by earlier implementations of AI through six principles: accountability, inclusiveness, reliability and safety, fairness, transparency, and privacy and security. These six principles guide how Microsoft designs, builds, and tests AI systems. Its commitment to inclusiveness, transparency, privacy, and security makes it an ideal partner for building AI into our juvenile case management system.

AI has huge potential to transform the juvenile justice system, and there are ways to harness it responsibly. By focusing on applications like voice-to-text, summarization, and personalized updates, we can improve efficiency, communication, and overall outcomes for young people without perpetuating biases. As we implement the technology, we need to stay aware of data security and privacy concerns and address them.

As we continue to innovate and refine our YouthCenter Juvenile Justice Case Management software, we are excited about the opportunities that AI presents to support caseworkers, probation officers, and, most importantly, the youth they serve. By responsibly integrating AI, we can help to revolutionize the juvenile justice system and create a brighter future for all.

If you’re a YouthCenter client of ours and want early access to the AI capabilities we’re adding, register here.

AI technologies were used to create this article. Tools including Grammarly and ChatGPT were used to shape the article, modify the text, and in some cases, generate text in the article.


Stay up to date on what BizStream is doing and keep in the loop on the latest in marketing & technology.